forked from Github/frigate

Compare commits

103 Commits

v0.12.0-be

...

v0.12.1

| Author | SHA1 | Date |
|---|---|---|
| | 367d7244d3 | |
| | deec5b8bfd | |
| | 39bf6c44bc | |
| | 76dbab6a8b | |
| | 6634be1f79 | |
| | 5951a740d2 | |
| | 305323c9e9 | |
| | 85015d9409 | |
| | 82aa238eca | |
| | cbd07696b5 | |
| | df016ddd0d | |
| | 6bcf44aee8 | |
| | 83aad5262a | |
| | 35ddc896fa | |
| | ba6794fb99 | |
| | 7960090409 | |
| | 9b109a7d14 | |
| | 03d37fe830 | |
| | 433bf690e3 | |
| | c820badb40 | |
| | 98384789d4 | |
| | 75e0ed38eb | |
| | 3b62ff093a | |
| | 6e0faa930a | |
| | d6c9538859 | |
| | dee471e9e9 | |
| | e3eae53cb9 | |
| | 19a65eaaac | |
| | da3e197534 | |
| | 0e61ea7723 | |
| | f855b1a2b3 | |
| | bc16ad1f13 | |
| | 1b8cd10142 | |
| | 83c80c570c | |
| | 4c5cd02ab7 | |
| | 7d589bd6e1 | |
| | 1bf3b83ef3 | |
| | b61b6f46cd | |
| | ac339d411c | |
| | 3f17f871fa | |
| | e454daf727 | |
| | 732e527401 | |
| | b44e6cd5dc | |
| | 2d9556f5f3 | |
| | e82f72a9d3 | |
| | ce2d589a28 | |
| | 750bf0e79a | |
| | 4dc6c93cdb | |
| | f7e9507bee | |
| | e8d8cc4f55 | |
| | c20c982ad0 | |
| | 962bdc7fa5 | |
| | a5e561c81d | |
| | c4ebafe777 | |
| | 7ed715b371 | |
| | 161e7b3fd7 | |
| | 42eaa13402 | |
| | 17c26c9fa9 | |
| | 318240c14c | |
| | 34bdf2fc10 | |
| | d97fa99ec5 | |
| | a554b22968 | |
| | 9621b4b9a1 | |
| | 3611e874ca | |
| | fbf29667d4 | |
| | c13dd132ee | |
| | 3524d1a055 | |
| | a8c567d877 | |
| | 80135342c2 | |
| | c2b13fdbdf | |
| | 2797a60d4f | |
| | 0592c8b0e2 | |
| | 2b685ac343 | |
| | 13122fc2b1 | |
| | c901707670 | |
| | 27d3676ba5 | |
| | 52459bf348 | |
| | 6cfa73a284 | |
| | 7b26935462 | |
| | c9cd810c9f | |
| | 1715e2e09d | |
| | b69c0daadb | |
| | 56d2978bc8 | |
| | 1ef109e171 | |
| | 08ab9dedf7 | |
| | 3d90366af2 | |
| | c74c9ff161 | |
| | 27a31e731f | |
| | 562e2627c2 | |
| | babd976533 | |
| | 748815b6ce | |
| | 88252e0ae6 | |
| | c0bf69b4bf | |
| | b6b10e753f | |
| | 4a45089b95 | |
| | 3b9bcb356b | |
| | e10ddb343c | |
| | e8cd25ddf2 | |
| | 624c314335 | |
| | b33094207c | |
| | 7083a5c9b6 | |
| | db131d4971 | |
| | 74d6ab0555 | |

@@ -1,6 +1,6 @@

|

||||

name: EdgeTpu Support Request

|

||||

description: Support for setting up EdgeTPU in Frigate

|

||||

title: "[EdgeTPU Support]: "

|

||||

name: Detector Support Request

|

||||

description: Support for setting up object detector in Frigate (Coral, OpenVINO, TensorRT, etc.)

|

||||

title: "[Detector Support]: "

|

||||

labels: ["support", "triage"]

|

||||

assignees: []

|

||||

body:

|

||||

5

.github/workflows/ci.yml

vendored


@@ -19,6 +19,11 @@ jobs:

|

||||

runs-on: ubuntu-latest

|

||||

name: Image Build

|

||||

steps:

|

||||

- name: Remove unnecessary files

|

||||

run: |

|

||||

sudo rm -rf /usr/share/dotnet

|

||||

sudo rm -rf /usr/local/lib/android

|

||||

sudo rm -rf /opt/ghc

|

||||

- id: lowercaseRepo

|

||||

uses: ASzc/change-string-case-action@v5

|

||||

with:

|

||||

|

||||

@@ -27,7 +27,7 @@ RUN --mount=type=tmpfs,target=/tmp --mount=type=tmpfs,target=/var/cache/apt \

|

||||

FROM wget AS go2rtc

|

||||

ARG TARGETARCH

|

||||

WORKDIR /rootfs/usr/local/go2rtc/bin

|

||||

RUN wget -qO go2rtc "https://github.com/AlexxIT/go2rtc/releases/download/v1.1.1/go2rtc_linux_${TARGETARCH}" \

|

||||

RUN wget -qO go2rtc "https://github.com/AlexxIT/go2rtc/releases/download/v1.2.0/go2rtc_linux_${TARGETARCH}" \

|

||||

&& chmod +x go2rtc

|

||||

|

||||

|

||||

@@ -207,6 +207,10 @@ FROM deps AS devcontainer

|

||||

# But start a fake service for simulating the logs

|

||||

COPY docker/fake_frigate_run /etc/s6-overlay/s6-rc.d/frigate/run

|

||||

|

||||

# Create symbolic link to the frigate source code, as go2rtc's create_config.sh uses it

|

||||

RUN mkdir -p /opt/frigate \

|

||||

&& ln -svf /workspace/frigate/frigate /opt/frigate/frigate

|

||||

|

||||

# Install Node 16

|

||||

RUN apt-get update \

|

||||

&& apt-get install wget -y \

|

||||

|

||||

2

Makefile


@@ -1,7 +1,7 @@

|

||||

default_target: local

|

||||

|

||||

COMMIT_HASH := $(shell git log -1 --pretty=format:"%h"|tail -1)

|

||||

VERSION = 0.12.0

|

||||

VERSION = 0.12.1

|

||||

IMAGE_REPO ?= ghcr.io/blakeblackshear/frigate

|

||||

CURRENT_UID := $(shell id -u)

|

||||

CURRENT_GID := $(shell id -g)

|

||||

|

||||

@@ -17,8 +17,9 @@ apt-get -qq install --no-install-recommends -y \

|

||||

mkdir -p -m 600 /root/.gnupg

|

||||

|

||||

# add coral repo

|

||||

wget --quiet -O /usr/share/keyrings/google-edgetpu.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

|

||||

echo "deb [signed-by=/usr/share/keyrings/google-edgetpu.gpg] https://packages.cloud.google.com/apt coral-edgetpu-stable main" | tee /etc/apt/sources.list.d/coral-edgetpu.list

|

||||

curl -fsSLo - https://packages.cloud.google.com/apt/doc/apt-key.gpg | \

|

||||

gpg --dearmor -o /etc/apt/trusted.gpg.d/google-cloud-packages-archive-keyring.gpg

|

||||

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | tee /etc/apt/sources.list.d/coral-edgetpu.list

|

||||

echo "libedgetpu1-max libedgetpu/accepted-eula select true" | debconf-set-selections

|

||||

|

||||

# enable non-free repo

|

||||

@@ -64,6 +65,9 @@ if [[ "${TARGETARCH}" == "amd64" ]]; then

|

||||

apt-get -qq install --no-install-recommends --no-install-suggests -y \

|

||||

intel-opencl-icd \

|

||||

mesa-va-drivers libva-drm2 intel-media-va-driver-non-free i965-va-driver libmfx1 radeontop intel-gpu-tools

|

||||

# something about this dependency requires it to be installed in a separate call rather than in the line above

|

||||

apt-get -qq install --no-install-recommends --no-install-suggests -y \

|

||||

i965-va-driver-shaders

|

||||

rm -f /etc/apt/sources.list.d/debian-testing.list

|

||||

fi

|

||||

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

set -euxo pipefail

|

||||

|

||||

s6_version="3.1.3.0"

|

||||

s6_version="3.1.4.1"

|

||||

|

||||

if [[ "${TARGETARCH}" == "amd64" ]]; then

|

||||

s6_arch="x86_64"

|

||||

|

||||

@@ -4,6 +4,8 @@

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

declare exit_code_container

|

||||

exit_code_container=$(cat /run/s6-linux-init-container-results/exitcode)

|

||||

readonly exit_code_container

|

||||

@@ -11,20 +13,16 @@ readonly exit_code_service="${1}"

|

||||

readonly exit_code_signal="${2}"

|

||||

readonly service="Frigate"

|

||||

|

||||

echo "Service ${service} exited with code ${exit_code_service} (by signal ${exit_code_signal})" >&2

|

||||

echo "[INFO] Service ${service} exited with code ${exit_code_service} (by signal ${exit_code_signal})"

|

||||

|

||||

if [[ "${exit_code_service}" -eq 256 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo $((128 + exit_code_signal)) > /run/s6-linux-init-container-results/exitcode

|

||||

echo $((128 + exit_code_signal)) >/run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

elif [[ "${exit_code_service}" -ne 0 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo "${exit_code_service}" > /run/s6-linux-init-container-results/exitcode

|

||||

echo "${exit_code_service}" >/run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

else

|

||||

# Exit code 0 is expected when Frigate is restarted by the user. In this case,

|

||||

# we create a signal for the go2rtc finish script to tolerate the restart.

|

||||

touch /dev/shm/restarting-frigate

|

||||

fi

|

||||

|

||||

exec /run/s6/basedir/bin/halt
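The exit-code handling in the finish script above can be restated as a small pure function. This is a minimal sketch in Python rather than the project's own code: the function name `container_exit_code` and its parameters are hypothetical, and it only models the value that ends up in `/run/s6-linux-init-container-results/exitcode`, not the `touch` or `halt` side effects.

```python
def container_exit_code(service_code: int, signal: int, current: int) -> int:
    """Return the container exit code the finish script would leave behind.

    service_code: first argument passed to the finish script (256 = killed by signal)
    signal:       second argument, the signal number
    current:      value already stored in the container results file
    """
    # The script only overwrites the stored code while it is still 0.
    if current != 0:
        return current
    if service_code == 256:
        # Killed by a signal: follow the usual shell convention of 128 + signal.
        return 128 + signal
    if service_code != 0:
        # Ordinary failure: propagate the service's own exit code.
        return service_code
    # Clean exit (for example a user-requested restart): leave the code at 0.
    return current
```

For example, a service terminated by SIGTERM (signal 15) while the stored code is still 0 maps to 143 under this sketch.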

|

||||

|

||||

@@ -4,12 +4,14 @@

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

# Tell S6-Overlay not to restart this service

|

||||

s6-svc -O .

|

||||

|

||||

echo "[INFO] Starting Frigate..." >&2

|

||||

echo "[INFO] Starting Frigate..."

|

||||

|

||||

cd /opt/frigate || echo "[ERROR] Failed to change working directory to /opt/frigate" >&2

|

||||

cd /opt/frigate || echo "[ERROR] Failed to change working directory to /opt/frigate"

|

||||

|

||||

# Replace the bash process with the Frigate process, redirecting stderr to stdout

|

||||

exec 2>&1

|

||||

|

||||

12

docker/rootfs/etc/s6-overlay/s6-rc.d/go2rtc-healthcheck/finish

Executable file


@@ -0,0 +1,12 @@

|

||||

#!/command/with-contenv bash

|

||||

# shellcheck shell=bash

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

readonly exit_code_service="${1}"

|

||||

readonly exit_code_signal="${2}"

|

||||

readonly service="go2rtc-healthcheck"

|

||||

|

||||

echo "[INFO] The ${service} service exited with code ${exit_code_service} (by signal ${exit_code_signal})"

|

||||

@@ -0,0 +1 @@

|

||||

go2rtc-log

|

||||

22

docker/rootfs/etc/s6-overlay/s6-rc.d/go2rtc-healthcheck/run

Executable file


@@ -0,0 +1,22 @@

|

||||

#!/command/with-contenv bash

|

||||

# shellcheck shell=bash

|

||||

# Start the go2rtc-healthcheck service

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

# Give some additional time for go2rtc to start before start pinging

|

||||

sleep 10s

|

||||

echo "[INFO] Starting go2rtc healthcheck service..."

|

||||

|

||||

while sleep 30s; do

|

||||

# Check if the service is running

|

||||

if ! curl --connect-timeout 10 --fail --silent --show-error --output /dev/null http://127.0.0.1:1984/api/streams 2>&1; then

|

||||

echo "[ERROR] The go2rtc service is not responding to ping, restarting..."

|

||||

# We can also use -r instead of -t to send kill signal rather than term

|

||||

s6-svc -t /var/run/service/go2rtc 2>&1

|

||||

# Give some additional time to go2rtc to restart before start pinging again

|

||||

sleep 10s

|

||||

fi

|

||||

done
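The polling logic of the healthcheck script above can be sketched in Python as follows. This is an illustration under stated assumptions, not part of the commit: `go2rtc_is_healthy` and `check_once` are hypothetical names, the URL and 10-second timeout are taken from the `curl` call in the script, and the restart action is passed in as a callable instead of invoking `s6-svc`.

```python
import urllib.request
from typing import Callable

# Endpoint and timeout copied from the curl call in the run script.
GO2RTC_API = "http://127.0.0.1:1984/api/streams"


def go2rtc_is_healthy(url: str = GO2RTC_API, timeout: float = 10.0) -> bool:
    """Return True if the go2rtc API answers without an HTTP or network error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except OSError:
        # URLError, HTTPError (4xx/5xx) and timeouts are all OSError subclasses.
        return False


def check_once(probe: Callable[[], bool], restart: Callable[[], None]) -> bool:
    """Run one healthcheck iteration: restart the service if the probe fails."""
    if probe():
        return True
    restart()
    return False
```

A caller would wrap `check_once(go2rtc_is_healthy, restart_go2rtc)` in a loop with a 30-second sleep, where `restart_go2rtc` is whatever restart mechanism is available in that environment.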

|

||||

@@ -0,0 +1 @@

|

||||

5000

|

||||

@@ -0,0 +1 @@

|

||||

longrun

|

||||

@@ -1 +1,2 @@

|

||||

go2rtc

|

||||

go2rtc-healthcheck

|

||||

|

||||

@@ -1,32 +1,12 @@

|

||||

#!/command/with-contenv bash

|

||||

# shellcheck shell=bash

|

||||

# Take down the S6 supervision tree when the service exits

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

declare exit_code_container

|

||||

exit_code_container=$(cat /run/s6-linux-init-container-results/exitcode)

|

||||

readonly exit_code_container

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

readonly exit_code_service="${1}"

|

||||

readonly exit_code_signal="${2}"

|

||||

readonly service="go2rtc"

|

||||

|

||||

echo "Service ${service} exited with code ${exit_code_service} (by signal ${exit_code_signal})" >&2

|

||||

|

||||

if [[ "${exit_code_service}" -eq 256 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo $((128 + exit_code_signal)) > /run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

elif [[ "${exit_code_service}" -ne 0 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo "${exit_code_service}" > /run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

else

|

||||

# go2rtc is not supposed to exit, so even when it exits with 0 we make the

|

||||

# container with 1. We only tolerate it when Frigate is restarting.

|

||||

if [[ "${exit_code_container}" -eq 0 && ! -f /dev/shm/restarting-frigate ]]; then

|

||||

echo "1" > /run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

fi

|

||||

|

||||

exec /run/s6/basedir/bin/halt

|

||||

echo "[INFO] The ${service} service exited with code ${exit_code_service} (by signal ${exit_code_signal})"

|

||||

|

||||

@@ -4,8 +4,7 @@

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Tell S6-Overlay not to restart this service

|

||||

s6-svc -O .

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

function get_ip_and_port_from_supervisor() {

|

||||

local ip_address

|

||||

@@ -19,9 +18,9 @@ function get_ip_and_port_from_supervisor() {

|

||||

jq --exit-status --raw-output '.data.ipv4.address[0]'

|

||||

) && [[ "${ip_address}" =~ ${ip_regex} ]]; then

|

||||

ip_address="${BASH_REMATCH[1]}"

|

||||

echo "[INFO] Got IP address from supervisor: ${ip_address}" >&2

|

||||

echo "[INFO] Got IP address from supervisor: ${ip_address}"

|

||||

else

|

||||

echo "[WARN] Failed to get IP address from supervisor" >&2

|

||||

echo "[WARN] Failed to get IP address from supervisor"

|

||||

return 0

|

||||

fi

|

||||

|

||||

@@ -35,26 +34,37 @@ function get_ip_and_port_from_supervisor() {

|

||||

jq --exit-status --raw-output '.data.network["8555/tcp"]'

|

||||

) && [[ "${webrtc_port}" =~ ${port_regex} ]]; then

|

||||

webrtc_port="${BASH_REMATCH[1]}"

|

||||

echo "[INFO] Got WebRTC port from supervisor: ${ip_address}" >&2

|

||||

echo "[INFO] Got WebRTC port from supervisor: ${webrtc_port}"

|

||||

else

|

||||

echo "[WARN] Failed to get WebRTC port from supervisor" >&2

|

||||

echo "[WARN] Failed to get WebRTC port from supervisor"

|

||||

return 0

|

||||

fi

|

||||

|

||||

export FRIGATE_GO2RTC_WEBRTC_CANDIDATE_INTERNAL="${ip_address}:${webrtc_port}"

|

||||

}

|

||||

|

||||

echo "[INFO] Preparing go2rtc config..." >&2

|

||||

if [[ ! -f "/dev/shm/go2rtc.yaml" ]]; then

|

||||

echo "[INFO] Preparing go2rtc config..."

|

||||

|

||||

if [[ -n "${SUPERVISOR_TOKEN:-}" ]]; then

|

||||

# Running as a Home Assistant add-on, infer the IP address and port

|

||||

get_ip_and_port_from_supervisor

|

||||

if [[ -n "${SUPERVISOR_TOKEN:-}" ]]; then

|

||||

# Running as a Home Assistant add-on, infer the IP address and port

|

||||

get_ip_and_port_from_supervisor

|

||||

fi

|

||||

|

||||

python3 /usr/local/go2rtc/create_config.py

|

||||

fi

|

||||

|

||||

raw_config=$(python3 /usr/local/go2rtc/create_config.py)

|

||||

readonly config_path="/config"

|

||||

|

||||

echo "[INFO] Starting go2rtc..." >&2

|

||||

if [[ -x "${config_path}/go2rtc" ]]; then

|

||||

readonly binary_path="${config_path}/go2rtc"

|

||||

echo "[WARN] Using go2rtc binary from '${binary_path}' instead of the embedded one"

|

||||

else

|

||||

readonly binary_path="/usr/local/go2rtc/bin/go2rtc"

|

||||

fi

|

||||

|

||||

echo "[INFO] Starting go2rtc..."

|

||||

|

||||

# Replace the bash process with the go2rtc process, redirecting stderr to stdout

|

||||

exec 2>&1

|

||||

exec go2rtc -config="${raw_config}"

|

||||

exec "${binary_path}" -config=/dev/shm/go2rtc.yaml

|

||||

|

||||

@@ -4,6 +4,8 @@

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

declare exit_code_container

|

||||

exit_code_container=$(cat /run/s6-linux-init-container-results/exitcode)

|

||||

readonly exit_code_container

|

||||

@@ -11,18 +13,18 @@ readonly exit_code_service="${1}"

|

||||

readonly exit_code_signal="${2}"

|

||||

readonly service="NGINX"

|

||||

|

||||

echo "Service ${service} exited with code ${exit_code_service} (by signal ${exit_code_signal})" >&2

|

||||

echo "[INFO] Service ${service} exited with code ${exit_code_service} (by signal ${exit_code_signal})"

|

||||

|

||||

if [[ "${exit_code_service}" -eq 256 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo $((128 + exit_code_signal)) > /run/s6-linux-init-container-results/exitcode

|

||||

echo $((128 + exit_code_signal)) >/run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

if [[ "${exit_code_signal}" -eq 15 ]]; then

|

||||

exec /run/s6/basedir/bin/halt

|

||||

fi

|

||||

elif [[ "${exit_code_service}" -ne 0 ]]; then

|

||||

if [[ "${exit_code_container}" -eq 0 ]]; then

|

||||

echo "${exit_code_service}" > /run/s6-linux-init-container-results/exitcode

|

||||

echo "${exit_code_service}" >/run/s6-linux-init-container-results/exitcode

|

||||

fi

|

||||

exec /run/s6/basedir/bin/halt

|

||||

fi

|

||||

|

||||

@@ -4,7 +4,9 @@

|

||||

|

||||

set -o errexit -o nounset -o pipefail

|

||||

|

||||

echo "[INFO] Starting NGINX..." >&2

|

||||

# Logs should be sent to stdout so that s6 can collect them

|

||||

|

||||

echo "[INFO] Starting NGINX..."

|

||||

|

||||

# Replace the bash process with the NGINX process, redirecting stderr to stdout

|

||||

exec 2>&1

|

||||

|

||||

@@ -1,5 +0,0 @@

|

||||

#!/command/with-contenv bash

|

||||

# shellcheck shell=bash

|

||||

|

||||

exec 2>&1

|

||||

exec python3 -u -m frigate "${@}"

|

||||

@@ -5,8 +5,13 @@ import os

|

||||

import sys

|

||||

import yaml

|

||||

|

||||

sys.path.insert(0, "/opt/frigate")

|

||||

from frigate.const import BIRDSEYE_PIPE, BTBN_PATH

|

||||

from frigate.ffmpeg_presets import parse_preset_hardware_acceleration_encode

|

||||

|

||||

sys.path.remove("/opt/frigate")

|

||||

|

||||

|

||||

BTBN_PATH = "/usr/lib/btbn-ffmpeg"

|

||||

FRIGATE_ENV_VARS = {k: v for k, v in os.environ.items() if k.startswith("FRIGATE_")}

|

||||

config_file = os.environ.get("CONFIG_FILE", "/config/config.yml")

|

||||

|

||||

@@ -19,12 +24,18 @@ with open(config_file) as f:

|

||||

raw_config = f.read()

|

||||

|

||||

if config_file.endswith((".yaml", ".yml")):

|

||||

config = yaml.safe_load(raw_config)

|

||||

config: dict[str, any] = yaml.safe_load(raw_config)

|

||||

elif config_file.endswith(".json"):

|

||||

config = json.loads(raw_config)

|

||||

config: dict[str, any] = json.loads(raw_config)

|

||||

|

||||

go2rtc_config: dict[str, any] = config.get("go2rtc", {})

|

||||

|

||||

# Need to enable CORS for go2rtc so the frigate integration / card work automatically

|

||||

if go2rtc_config.get("api") is None:

|

||||

go2rtc_config["api"] = {"origin": "*"}

|

||||

elif go2rtc_config["api"].get("origin") is None:

|

||||

go2rtc_config["api"]["origin"] = "*"

|

||||

|

||||

# we want to ensure that logs are easy to read

|

||||

if go2rtc_config.get("log") is None:

|

||||

go2rtc_config["log"] = {"format": "text"}

|

||||

@@ -34,7 +45,9 @@ elif go2rtc_config["log"].get("format") is None:

|

||||

if not go2rtc_config.get("webrtc", {}).get("candidates", []):

|

||||

default_candidates = []

|

||||

# use internal candidate if it was discovered when running through the add-on

|

||||

internal_candidate = os.environ.get("FRIGATE_GO2RTC_WEBRTC_CANDIDATE_INTERNAL", None)

|

||||

internal_candidate = os.environ.get(

|

||||

"FRIGATE_GO2RTC_WEBRTC_CANDIDATE_INTERNAL", None

|

||||

)

|

||||

if internal_candidate is not None:

|

||||

default_candidates.append(internal_candidate)

|

||||

# should set default stun server so webrtc can work

|

||||

@@ -42,8 +55,10 @@ if not go2rtc_config.get("webrtc", {}).get("candidates", []):

|

||||

|

||||

go2rtc_config["webrtc"] = {"candidates": default_candidates}

|

||||

else:

|

||||

print("[INFO] Not injecting WebRTC candidates into go2rtc config as it has been set manually", file=sys.stderr)

|

||||

|

||||

print(

|

||||

"[INFO] Not injecting WebRTC candidates into go2rtc config as it has been set manually",

|

||||

)

|

||||

|

||||

# sets default RTSP response to be equivalent to ?video=h264,h265&audio=aac

|

||||

# this means user does not need to specify audio codec when using restream

|

||||

# as source for frigate and the integration supports HLS playback

|

||||

@@ -62,14 +77,30 @@ if not os.path.exists(BTBN_PATH):

|

||||

go2rtc_config["ffmpeg"][

|

||||

"rtsp"

|

||||

] = "-fflags nobuffer -flags low_delay -stimeout 5000000 -user_agent go2rtc/ffmpeg -rtsp_transport tcp -i {input}"

|

||||

|

||||

|

||||

for name in go2rtc_config.get("streams", {}):

|

||||

stream = go2rtc_config["streams"][name]

|

||||

|

||||

if isinstance(stream, str):

|

||||

go2rtc_config["streams"][name] = go2rtc_config["streams"][name].format(**FRIGATE_ENV_VARS)

|

||||

go2rtc_config["streams"][name] = go2rtc_config["streams"][name].format(

|

||||

**FRIGATE_ENV_VARS

|

||||

)

|

||||

elif isinstance(stream, list):

|

||||

for i, stream in enumerate(stream):

|

||||

go2rtc_config["streams"][name][i] = stream.format(**FRIGATE_ENV_VARS)

|

||||

|

||||

print(json.dumps(go2rtc_config))

|

||||

# add birdseye restream stream if enabled

|

||||

if config.get("birdseye", {}).get("restream", False):

|

||||

birdseye: dict[str, any] = config.get("birdseye")

|

||||

|

||||

input = f"-f rawvideo -pix_fmt yuv420p -video_size {birdseye.get('width', 1280)}x{birdseye.get('height', 720)} -r 10 -i {BIRDSEYE_PIPE}"

|

||||

ffmpeg_cmd = f"exec:{parse_preset_hardware_acceleration_encode(config.get('ffmpeg', {}).get('hwaccel_args'), input, '-rtsp_transport tcp -f rtsp {output}')}"

|

||||

|

||||

if go2rtc_config.get("streams"):

|

||||

go2rtc_config["streams"]["birdseye"] = ffmpeg_cmd

|

||||

else:

|

||||

go2rtc_config["streams"] = {"birdseye": ffmpeg_cmd}

|

||||

|

||||

# Write go2rtc_config to /dev/shm/go2rtc.yaml

|

||||

with open("/dev/shm/go2rtc.yaml", "w") as f:

|

||||

yaml.dump(go2rtc_config, f)
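The environment-variable substitution performed on the stream definitions above can be isolated into a small helper for experimentation. This is a sketch, not the script itself: `collect_frigate_env` and `substitute_env_vars` are hypothetical names, and the behavior mirrors the `str.format(**FRIGATE_ENV_VARS)` calls in the diff.

```python
from typing import Mapping, Union

Stream = Union[str, list]


def collect_frigate_env(environ: Mapping[str, str]) -> dict:
    """Keep only variables whose names start with FRIGATE_, as the script does."""
    return {k: v for k, v in environ.items() if k.startswith("FRIGATE_")}


def substitute_env_vars(streams: Mapping[str, Stream], env_vars: Mapping[str, str]) -> dict:
    """Return a copy of the streams mapping with {FRIGATE_*} placeholders filled in."""
    result = {}
    for name, stream in streams.items():
        if isinstance(stream, str):
            result[name] = stream.format(**env_vars)
        elif isinstance(stream, list):
            result[name] = [source.format(**env_vars) for source in stream]
        else:
            # Leave any other value untouched.
            result[name] = stream
    return result
```

Because this relies on `str.format`, a placeholder with no matching variable raises `KeyError`, so every `{NAME}` used in a stream string needs a corresponding `FRIGATE_`-prefixed variable.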

|

||||

|

||||

@@ -108,3 +108,14 @@ To do this:

|

||||

3. Restart Frigate and the custom version will be used if the mapping was done correctly.

|

||||

|

||||

NOTE: The folder that is mapped from the host needs to be the folder that contains `/bin`. So if the full structure is `/home/appdata/frigate/custom-ffmpeg/bin/ffmpeg` then `/home/appdata/frigate/custom-ffmpeg` needs to be mapped to `/usr/lib/btbn-ffmpeg`.

|

||||

|

||||

## Custom go2rtc version

Frigate currently includes go2rtc v1.2.0. There may be certain cases where you want to run a different version of go2rtc.

To do this:

1. Download the go2rtc build to the `/config` folder.
2. Rename the build to `go2rtc`.
3. Give `go2rtc` execute permission.
4. Restart Frigate and the custom version will be used. You can verify this by checking the go2rtc logs.
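The selection rule behind these steps (use an executable `go2rtc` in `/config` if present, otherwise the embedded binary) can be sketched in Python. This is an illustration only: the function name `pick_go2rtc_binary` is hypothetical, the two default paths are the ones that appear in the go2rtc run script, and the extra `isfile` check is slightly stricter than a shell `-x` test.

```python
import os

# Paths as they appear in the go2rtc run script.
CONFIG_DIR = "/config"
EMBEDDED_BINARY = "/usr/local/go2rtc/bin/go2rtc"


def pick_go2rtc_binary(config_dir: str = CONFIG_DIR, embedded: str = EMBEDDED_BINARY) -> str:
    """Return the custom binary if it is an executable file, else the embedded one."""
    candidate = os.path.join(config_dir, "go2rtc")
    if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
        return candidate
    return embedded
```

If step 3 is skipped, the file is not executable and this sketch falls back to the embedded binary, which is one reason a custom build may appear to be ignored.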

|

||||

|

||||

@@ -16,7 +16,7 @@ Note that mjpeg cameras require encoding the video into h264 for recording, and

|

||||

```yaml

|

||||

go2rtc:

|

||||

streams:

|

||||

mjpeg_cam: ffmpeg:{your_mjpeg_stream_url}#video=h264#hardware # <- use hardware acceleration to create an h264 stream usable for other components.

|

||||

mjpeg_cam: "ffmpeg:{your_mjpeg_stream_url}#video=h264#hardware" # <- use hardware acceleration to create an h264 stream usable for other components.

|

||||

|

||||

cameras:

|

||||

...

|

||||

@@ -110,7 +110,7 @@ go2rtc:

|

||||

streams:

|

||||

reolink:

|

||||

- http://reolink_ip/flv?port=1935&app=bcs&stream=channel0_main.bcs&user=username&password=password

|

||||

- ffmpeg:reolink#audio=opus

|

||||

- "ffmpeg:reolink#audio=opus"

|

||||

reolink_sub:

|

||||

- http://reolink_ip/flv?port=1935&app=bcs&stream=channel0_ext.bcs&user=username&password=password

|

||||

|

||||

@@ -126,19 +126,28 @@ cameras:

|

||||

input_args: preset-rtsp-restream

|

||||

roles:

|

||||

- detect

|

||||

detect:

|

||||

width: 896

|

||||

height: 672

|

||||

fps: 7

|

||||

```

|

||||

|

||||

### Unifi Protect Cameras

|

||||

|

||||

In the Unifi 2.0 update Unifi Protect Cameras had a change in audio sample rate which causes issues for ffmpeg. The input rate needs to be set for record and rtmp.

|

||||

Unifi protect cameras require the rtspx stream to be used with go2rtc.

|

||||

To utilize a Unifi protect camera, modify the rtsps link to begin with rtspx.

|

||||

Additionally, remove the "?enableSrtp" from the end of the Unifi link.

|

||||

|

||||

```yaml

|

||||

go2rtc:

|

||||

streams:

|

||||

front:

|

||||

- rtspx://192.168.1.1:7441/abcdefghijk

|

||||

```
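The link rewrite described above (change `rtsps` to `rtspx` and drop the trailing `?enableSrtp`) can be sketched as a small helper. The function name `to_rtspx` is hypothetical, and the sketch assumes the link ends with exactly `?enableSrtp` when that suffix is present.

```python
def to_rtspx(url: str) -> str:
    """Convert a Unifi Protect rtsps link into the rtspx form used with go2rtc."""
    scheme, separator, rest = url.partition("://")
    if scheme != "rtsps" or not separator:
        raise ValueError(f"expected an rtsps:// link, got: {url}")
    # Drop the SRTP flag that Unifi appends to the shared link.
    rest = rest.removesuffix("?enableSrtp")
    return "rtspx://" + rest
```

Applied to `rtsps://192.168.1.1:7441/abcdefghijk?enableSrtp`, this yields the `rtspx://192.168.1.1:7441/abcdefghijk` form shown in the configuration example.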

|

||||

|

||||

[See the go2rtc docs for more information](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#source-rtsp)

|

||||

|

||||

In the Unifi 2.0 update, Unifi Protect cameras had a change in audio sample rate that causes issues for ffmpeg. The input rate needs to be set for record and rtmp if the stream is used directly from Unifi Protect.

|

||||

|

||||

```yaml

|

||||

ffmpeg:

|

||||

output_args:

|

||||

record: preset-record-ubiquiti

|

||||

rtmp: preset-rtmp-ubiquiti

|

||||

rtmp: preset-rtmp-ubiquiti # recommend using go2rtc instead

|

||||

```

|

||||

|

||||

@@ -101,7 +101,7 @@ The OpenVINO device to be used is specified using the `"device"` attribute accor

|

||||

|

||||

OpenVINO is supported on 6th Gen Intel platforms (Skylake) and newer. A supported Intel platform is required to use the `GPU` device with OpenVINO. The `MYRIAD` device may be run on any platform, including Arm devices. For detailed system requirements, see [OpenVINO System Requirements](https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/system-requirements.html)

|

||||

|

||||

An OpenVINO model is provided in the container at `/openvino-model/ssdlite_mobilenet_v2.xml` and is used by this detector type by default. The model comes from Intel's Open Model Zoo [SSDLite MobileNet V2](https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/ssdlite_mobilenet_v2) and is converted to an FP16 precision IR model. Use the model configuration shown below when using the OpenVINO detector.

|

||||

An OpenVINO model is provided in the container at `/openvino-model/ssdlite_mobilenet_v2.xml` and is used by this detector type by default. The model comes from Intel's Open Model Zoo [SSDLite MobileNet V2](https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/ssdlite_mobilenet_v2) and is converted to an FP16 precision IR model. Use the model configuration shown below when using the OpenVINO detector with the default model.

|

||||

|

||||

```yaml

|

||||

detectors:

|

||||

@@ -119,6 +119,25 @@ model:

|

||||

labelmap_path: /openvino-model/coco_91cl_bkgr.txt

|

||||

```

|

||||

|

||||

This detector also supports some YOLO variants: YOLOX, YOLOv5, and YOLOv8 specifically. Other YOLO variants are not officially supported/tested. Frigate does not come with any yolo models preloaded, so you will need to supply your own models. This detector has been verified to work with the [yolox_tiny](https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/yolox-tiny) model from Intel's Open Model Zoo. You can follow [these instructions](https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/yolox-tiny#download-a-model-and-convert-it-into-openvino-ir-format) to retrieve the OpenVINO-compatible `yolox_tiny` model. Make sure that the model input dimensions match the `width` and `height` parameters, and `model_type` is set accordingly. See [Full Configuration Reference](/configuration/index.md#full-configuration-reference) for a list of possible `model_type` options. Below is an example of how `yolox_tiny` can be used in Frigate:

|

||||

|

||||

```yaml

|

||||

detectors:

|

||||

ov:

|

||||

type: openvino

|

||||

device: AUTO

|

||||

model:

|

||||

path: /path/to/yolox_tiny.xml

|

||||

|

||||

model:

|

||||

width: 416

|

||||

height: 416

|

||||

input_tensor: nchw

|

||||

input_pixel_format: bgr

|

||||

model_type: yolox

|

||||

labelmap_path: /path/to/coco_80cl.txt

|

||||

```

|

||||

|

||||

### Intel NCS2 VPU and Myriad X Setup

|

||||

|

||||

Intel produces a neural net inference acceleration chip called Myriad X. This chip was sold in their Neural Compute Stick 2 (NCS2), which has been discontinued. If intending to use the MYRIAD device for acceleration, additional setup is required to pass through the USB device. The host needs a udev rule installed to handle the NCS2 device.

|

||||

@@ -179,7 +198,7 @@ To generate model files, create a new folder to save the models, download the sc

|

||||

|

||||

```bash

|

||||

mkdir trt-models

|

||||

wget https://raw.githubusercontent.com/blakeblackshear/frigate/docker/tensorrt_models.sh

|

||||

wget https://github.com/blakeblackshear/frigate/raw/master/docker/tensorrt_models.sh

|

||||

chmod +x tensorrt_models.sh

|

||||

docker run --gpus=all --rm -it -v `pwd`/trt-models:/tensorrt_models -v `pwd`/tensorrt_models.sh:/tensorrt_models.sh nvcr.io/nvidia/tensorrt:22.07-py3 /tensorrt_models.sh

|

||||

```

|

||||

@@ -212,6 +231,10 @@ yolov4x-mish-320

|

||||

yolov4x-mish-640

|

||||

yolov7-tiny-288

|

||||

yolov7-tiny-416

|

||||

yolov7-640

|

||||

yolov7-320

|

||||

yolov7x-640

|

||||

yolov7x-320

|

||||

```

|

||||

|

||||

### Configuration Parameters

|

||||

|

||||

@@ -28,16 +28,17 @@ Input args presets help make the config more readable and handle use cases for d

|

||||

|

||||

See [the camera specific docs](/configuration/camera_specific.md) for more info on non-standard cameras and recommendations for using them in Frigate.

|

||||

|

||||

| Preset | Usage | Other Notes |
| ------------------------- | ------------------------- | --------------------------------------------------- |
| preset-http-jpeg-generic | HTTP Live Jpeg | Recommend restreaming live jpeg instead |
| preset-http-mjpeg-generic | HTTP Mjpeg Stream | Recommend restreaming mjpeg stream instead |
| preset-http-reolink | Reolink HTTP-FLV Stream | Only for reolink http, not when restreaming as rtsp |
| preset-rtmp-generic | RTMP Stream | |
| preset-rtsp-generic | RTSP Stream | This is the default when nothing is specified |
| preset-rtsp-restream | RTSP Stream from restream | Use when using rtsp restream as source |
| preset-rtsp-udp | RTSP Stream via UDP | Use when camera is UDP only |
| preset-rtsp-blue-iris | Blue Iris RTSP Stream | Use when consuming a stream from Blue Iris |

|

||||

| Preset | Usage | Other Notes |
| -------------------------------- | ------------------------- | ------------------------------------------------------------------------------------------------ |
| preset-http-jpeg-generic | HTTP Live Jpeg | Recommend restreaming live jpeg instead |
| preset-http-mjpeg-generic | HTTP Mjpeg Stream | Recommend restreaming mjpeg stream instead |
| preset-http-reolink | Reolink HTTP-FLV Stream | Only for reolink http, not when restreaming as rtsp |
| preset-rtmp-generic | RTMP Stream | |
| preset-rtsp-generic | RTSP Stream | This is the default when nothing is specified |
| preset-rtsp-restream | RTSP Stream from restream | Use for rtsp restream as source for frigate |
| preset-rtsp-restream-low-latency | RTSP Stream from restream | Use for rtsp restream as source for frigate to lower latency, may cause issues with some cameras |
| preset-rtsp-udp | RTSP Stream via UDP | Use when camera is UDP only |
| preset-rtsp-blue-iris | Blue Iris RTSP Stream | Use when consuming a stream from Blue Iris |

|

||||

|

||||

:::caution

|

||||

|

||||

@@ -46,21 +47,22 @@ It is important to be mindful of input args when using restream because you can

|

||||

:::

|

||||

|

||||

```yaml

|

||||

go2rtc:

|

||||

streams:

|

||||

reolink_cam: http://192.168.0.139/flv?port=1935&app=bcs&stream=channel0_main.bcs&user=admin&password=password

|

||||

|

||||

cameras:

|

||||

reolink_cam:

|

||||

ffmpeg:

|

||||

inputs:

|

||||

- path: http://192.168.0.139/flv?port=1935&app=bcs&stream=channel0_ext.bcs&user=admin&password={FRIGATE_CAM_PASSWORD}

|

||||

- path: http://192.168.0.139/flv?port=1935&app=bcs&stream=channel0_ext.bcs&user=admin&password=password

|

||||

input_args: preset-http-reolink

|

||||

roles:

|

||||

- detect

|

||||

- path: rtsp://192.168.0.10:8554/garage

|

||||

- path: rtsp://127.0.0.1:8554/reolink_cam

|

||||

input_args: preset-rtsp-generic

|

||||

roles:

|

||||

- record

|

||||

- path: http://192.168.0.139/flv?port=1935&app=bcs&stream=channel0_main.bcs&user=admin&password={FRIGATE_CAM_PASSWORD}

|

||||

roles:

|

||||

- restream

|

||||

```

|

||||

|

||||

### Output Args Presets

|

||||

|

||||

@@ -15,23 +15,120 @@ ffmpeg:

|

||||

hwaccel_args: preset-rpi-64-h264

|

||||

```

|

||||

|

||||

### Intel-based CPUs (<10th Generation) via Quicksync

|

||||

:::note

If running Frigate in Docker, you either need to run in privileged mode or be sure to map the `/dev/video1x` devices to Frigate.

```bash
docker run -d \
  --name frigate \
  ...
  --device /dev/video10 \
  ghcr.io/blakeblackshear/frigate:stable
```

:::

|

||||

|

||||

### Intel-based CPUs

|

||||

|

||||

#### Via VAAPI

|

||||

|

||||

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams. VAAPI is recommended for all generations of Intel-based CPUs if QSV does not work.

|

||||

|

||||

```yaml
ffmpeg:
  hwaccel_args: preset-vaapi
```

|

||||

|

||||

**NOTICE**: With some of the processors, like the J4125, the default driver `iHD` doesn't seem to work correctly for hardware acceleration. You may need to change the driver to `i965` by adding the following environment variable `LIBVA_DRIVER_NAME=i965` to your docker-compose file or [in the frigate.yml for HA OS users](advanced.md#environment_vars).
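
For docker-compose users, the driver override described above might look like the sketch below. Only the `LIBVA_DRIVER_NAME` value comes from the text; the rest of the service definition is abbreviated and assumed.

```yaml
services:
  frigate:
    image: ghcr.io/blakeblackshear/frigate:stable
    environment:
      # Force the older i965 VAAPI driver instead of the default iHD
      LIBVA_DRIVER_NAME: i965
```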

|

||||

|

||||

### Intel-based CPUs (>=10th Generation) via Quicksync

|

||||

#### Via Quicksync (>=10th Generation only)

|

||||

|

||||

QSV must be set specifically based on the video encoding of the stream.

|

||||

|

||||

##### H.264 streams

|

||||

|

||||

```yaml
ffmpeg:
  hwaccel_args: preset-intel-qsv-h264
```

|

||||

|

||||

##### H.265 streams

|

||||

|

||||

```yaml
ffmpeg:
  hwaccel_args: preset-intel-qsv-h265
```

|

||||

|

||||

#### Configuring Intel GPU Stats in Docker

|

||||

|

||||

Additional configuration is needed for the Docker container to be able to access the `intel_gpu_top` command for GPU stats. Three possible changes can be made:

1. Run the container as privileged.
2. Add the `CAP_PERFMON` capability.
3. Set `perf_event_paranoid` low enough to allow access to the performance event system.

|

||||

|

||||

##### Run as privileged

|

||||

|

||||

This method works, but it gives more permissions to the container than are actually needed.

|

||||

|

||||

###### Docker Compose - Privileged

|

||||

|

||||

```yaml
services:
  frigate:
    ...
    image: ghcr.io/blakeblackshear/frigate:stable
    privileged: true
```

|

||||

|

||||

###### Docker Run CLI - Privileged

|

||||

|

||||

```bash
docker run -d \
  --name frigate \
  ...
  --privileged \
  ghcr.io/blakeblackshear/frigate:stable
```

|

||||

|

||||

##### CAP_PERFMON

|

||||

|

||||

Only recent versions of Docker support the `CAP_PERFMON` capability. You can test to see if yours supports it by running: `docker run --cap-add=CAP_PERFMON hello-world`

|

||||

|

||||

###### Docker Compose - CAP_PERFMON

|

||||

|

||||

```yaml
services:
  frigate:
    ...
    image: ghcr.io/blakeblackshear/frigate:stable
    cap_add:
      - CAP_PERFMON
```

|

||||

|

||||

###### Docker Run CLI - CAP_PERFMON

|

||||

|

||||

```bash
docker run -d \
  --name frigate \
  ...
  --cap-add=CAP_PERFMON \
  ghcr.io/blakeblackshear/frigate:stable
```

|

||||

|

||||

##### perf_event_paranoid

|

||||

|

||||

_Note: This setting must be changed for the entire system._

|

||||

|

||||

For more information on the various values across different distributions, see https://askubuntu.com/questions/1400874/what-does-perf-paranoia-level-four-do.

|

||||

|

||||

Depending on your OS and kernel configuration, you may need to change the `/proc/sys/kernel/perf_event_paranoid` kernel tunable. You can test the change by running `sudo sh -c 'echo 2 >/proc/sys/kernel/perf_event_paranoid'` which will persist until a reboot. Make it permanent by running `sudo sh -c 'echo kernel.perf_event_paranoid=1 >> /etc/sysctl.d/local.conf'`

|

||||

|

||||

### AMD/ATI GPUs (Radeon HD 2000 and newer GPUs) via libva-mesa-driver

|

||||

|

||||

VAAPI supports automatic profile selection so it will work automatically with both H.264 and H.265 streams.

|

||||

|

||||

**Note:** You also need to set `LIBVA_DRIVER_NAME=radeonsi` as an environment variable on the container.

|

||||

|

||||

```yaml

|

||||

@@ -43,15 +140,15 @@ ffmpeg:

|

||||

|

||||

While older GPUs may work, it is recommended to use modern, supported GPUs. NVIDIA provides a [matrix of supported GPUs and features](https://developer.nvidia.com/video-encode-and-decode-gpu-support-matrix-new). If your card is on the list and supports CUVID/NVDEC, it will most likely work with Frigate for decoding. However, you must also use [a driver version that will work with FFmpeg](https://github.com/FFmpeg/nv-codec-headers/blob/master/README). Older driver versions may be missing symbols and fail to work, and older cards are not supported by newer driver versions. The only way around this is to [provide your own FFmpeg](/configuration/advanced#custom-ffmpeg-build) that will work with your driver version, but this is unsupported and may not work well if at all.

|

||||

|

||||

A more complete list of cards and ther compatible drivers is available in the [driver release readme](https://download.nvidia.com/XFree86/Linux-x86_64/525.85.05/README/supportedchips.html).

|

||||

A more complete list of cards and their compatible drivers is available in the [driver release readme](https://download.nvidia.com/XFree86/Linux-x86_64/525.85.05/README/supportedchips.html).

|

||||

|

||||

If your distribution does not offer NVIDIA driver packages, you can [download them here](https://www.nvidia.com/en-us/drivers/unix/).

|

||||

|

||||

#### Docker Configuration

|

||||

#### Configuring Nvidia GPUs in Docker

|

||||

|

||||

Additional configuration is needed for the Docker container to be able to access the NVIDIA GPU. The supported method for this is to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#docker) and specify the GPU to Docker. How you do this depends on how Docker is being run:

|

||||

|

||||

##### Docker Compose

|

||||

##### Docker Compose - Nvidia GPU

|

||||

|

||||

```yaml

|

||||

services:

|

||||

@@ -68,7 +165,7 @@ services:

|

||||

capabilities: [gpu]

|

||||

```

|

||||

|

||||

##### Docker Run CLI

|

||||

##### Docker Run CLI - Nvidia GPU

|

||||

|

||||

```bash

|

||||

docker run -d \

|

||||

|

||||

@@ -3,7 +3,7 @@ id: index

|

||||

title: Configuration File

|

||||

---

|

||||

|

||||

For Home Assistant Addon installations, the config file needs to be in the root of your Home Assistant config directory (same location as `configuration.yaml`) and named `frigate.yml`.

|

||||

For Home Assistant Addon installations, the config file needs to be in the root of your Home Assistant config directory (same location as `configuration.yaml`). It can be named `frigate.yml` or `frigate.yaml`, but if both files exist `frigate.yaml` will be preferred and `frigate.yml` will be ignored.

|

||||

|

||||

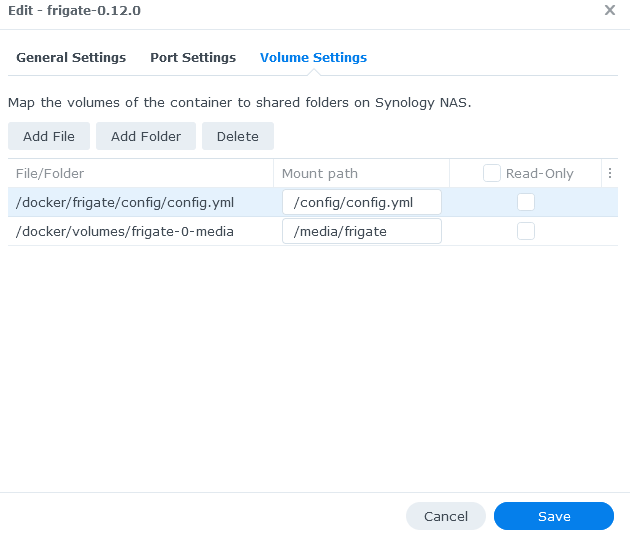

For all other installation types, the config file should be mapped to `/config/config.yml` inside the container.

|

||||

|

||||

@@ -19,7 +19,6 @@ cameras:

|

||||

- path: rtsp://viewer:{FRIGATE_RTSP_PASSWORD}@10.0.10.10:554/cam/realmonitor?channel=1&subtype=2

|

||||

roles:

|

||||

- detect

|

||||

- restream

|

||||

detect:

|

||||

width: 1280

|

||||

height: 720

|

||||

@@ -37,6 +36,25 @@ It is not recommended to copy this full configuration file. Only specify values

|

||||

|

||||

:::

|

||||

|

||||

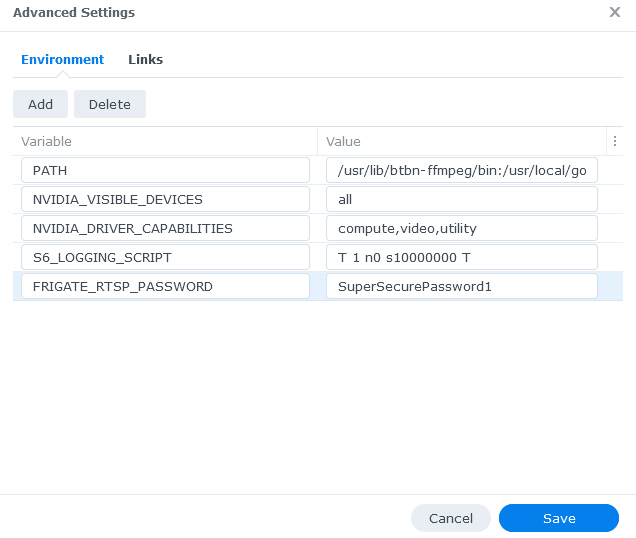

**Note:** The following values will be replaced at runtime by using environment variables:

- `{FRIGATE_MQTT_USER}`
- `{FRIGATE_MQTT_PASSWORD}`
- `{FRIGATE_RTSP_USER}`
- `{FRIGATE_RTSP_PASSWORD}`

For example:

```yaml
mqtt:
  user: "{FRIGATE_MQTT_USER}"
  password: "{FRIGATE_MQTT_PASSWORD}"
```

```yaml
- path: rtsp://{FRIGATE_RTSP_USER}:{FRIGATE_RTSP_PASSWORD}@10.0.10.10:8554/unicast
```
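
The substitution only works if the variables are actually set on the container. A minimal docker-compose sketch, assuming the values are supplied inline (the credentials shown are placeholders):

```yaml
services:
  frigate:
    image: ghcr.io/blakeblackshear/frigate:stable
    environment:
      # Placeholder credentials; use your own values or an env file
      FRIGATE_RTSP_USER: "viewer"
      FRIGATE_RTSP_PASSWORD: "change-me"
```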

|

||||

|

||||

```yaml

|

||||

mqtt:

|

||||

# Optional: Enable mqtt server (default: shown below)

|

||||

@@ -105,6 +123,9 @@ model:

|

||||

# Optional: Object detection model input tensor format

|

||||

# Valid values are nhwc or nchw (default: shown below)

|

||||

input_tensor: nhwc

|

||||

# Optional: Object detection model type, currently only used with the OpenVINO detector

|

||||

# Valid values are ssd, yolox, yolov5, or yolov8 (default: shown below)

|

||||

model_type: ssd

|

||||

# Optional: Label name modifications. These are merged into the standard labelmap.

|

||||

labelmap:

|

||||

2: vehicle

|

||||

@@ -146,7 +167,7 @@ birdseye:

|

||||

# More information about presets at https://docs.frigate.video/configuration/ffmpeg_presets

|

||||

ffmpeg:

|

||||

# Optional: global ffmpeg args (default: shown below)

|

||||

global_args: -hide_banner -loglevel warning

|

||||

global_args: -hide_banner -loglevel warning -threads 2

|

||||

# Optional: global hwaccel args (default: shown below)

|

||||

# NOTE: See hardware acceleration docs for your specific device

|

||||

hwaccel_args: []

|

||||

@@ -155,7 +176,7 @@ ffmpeg:

|

||||

# Optional: global output args

|

||||

output_args:

|

||||

# Optional: output args for detect streams (default: shown below)

|

||||

detect: -f rawvideo -pix_fmt yuv420p

|

||||

detect: -threads 2 -f rawvideo -pix_fmt yuv420p

|

||||

# Optional: output args for record streams (default: shown below)

|

||||

record: preset-record-generic

|

||||

# Optional: output args for rtmp streams (default: shown below)

|

||||

@@ -172,7 +193,6 @@ detect:

|

||||

# NOTE: Recommended value of 5. Ideally, try and reduce your FPS on the camera.

|

||||

fps: 5

|

||||

# Optional: enables detection for the camera (default: True)

|

||||

# This value can be set via MQTT and will be updated in startup based on retained value

|

||||

enabled: True

|

||||

# Optional: Number of frames without a detection before Frigate considers an object to be gone. (default: 5x the frame rate)

|

||||

max_disappeared: 25

|

||||

@@ -320,7 +340,6 @@ record:

|

||||

# NOTE: Can be overridden at the camera level

|

||||

snapshots:

|

||||

# Optional: Enable writing jpg snapshot to /media/frigate/clips (default: shown below)

|

||||

# This value can be set via MQTT and will be updated in startup based on retained value

|

||||

enabled: False

|

||||

# Optional: save a clean PNG copy of the snapshot image (default: shown below)

|

||||

clean_copy: True

|

||||

@@ -350,7 +369,7 @@ rtmp:

|

||||

enabled: False

|

||||

|

||||

# Optional: Restream configuration

|

||||

# Uses https://github.com/AlexxIT/go2rtc (v1.1.1)

|

||||

# Uses https://github.com/AlexxIT/go2rtc (v1.2.0)

|

||||

go2rtc:

|

||||

|

||||

# Optional: jsmpeg stream configuration for WebUI

|

||||

@@ -405,12 +424,12 @@ cameras:

|

||||

# Required: the path to the stream

|

||||

# NOTE: path may include environment variables, which must begin with 'FRIGATE_' and be referenced in {}

|

||||

- path: rtsp://viewer:{FRIGATE_RTSP_PASSWORD}@10.0.10.10:554/cam/realmonitor?channel=1&subtype=2

|

||||

# Required: list of roles for this stream. valid values are: detect,record,restream,rtmp

|

||||

# NOTICE: In addition to assigning the record, restream, and rtmp roles,

|

||||

# Required: list of roles for this stream. valid values are: detect,record,rtmp

|

||||

# NOTICE: In addition to assigning the record and rtmp roles,

|

||||

# they must also be enabled in the camera config.

|

||||

roles:

|

||||

- detect

|

||||

- restream

|

||||

- record

|

||||

- rtmp

|

||||

# Optional: stream specific global args (default: inherit)

|

||||

# global_args:

|

||||

@@ -483,9 +502,32 @@ ui:

|

||||

# Optional: Set the default live mode for cameras in the UI (default: shown below)

|

||||

live_mode: mse

|

||||

# Optional: Set a timezone to use in the UI (default: use browser local time)

|

||||

timezone: None

|

||||

# timezone: America/Denver

|

||||

# Optional: Use an experimental recordings / camera view UI (default: shown below)

|

||||

experimental_ui: False

|

||||

use_experimental: False

|

||||

# Optional: Set the time format used.

|

||||

# Options are browser, 12hour, or 24hour (default: shown below)

|

||||

time_format: browser

|

||||

# Optional: Set the date style for a specified length.

|

||||

# Options are: full, long, medium, short

|

||||

# Examples:

|

||||

# short: 2/11/23

|

||||

# medium: Feb 11, 2023

|

||||

# full: Saturday, February 11, 2023

|

||||

# (default: shown below).

|

||||

date_style: short

|

||||

# Optional: Set the time style for a specified length.

|

||||

# Options are: full, long, medium, short

|

||||

# Examples:

|

||||

# short: 8:14 PM

|

||||

# medium: 8:15:22 PM

|

||||

# full: 8:15:22 PM Mountain Standard Time

|

||||

# (default: shown below).

|

||||

time_style: medium

|

||||

# Optional: Ability to manually override the date / time styling to use strftime format

|

||||

# https://www.gnu.org/software/libc/manual/html_node/Formatting-Calendar-Time.html

|

||||

# possible values are shown above (default: not set)

|

||||

strftime_fmt: "%Y/%m/%d %H:%M"

|

||||

|

||||

# Optional: Telemetry configuration

|

||||

telemetry:

|

||||

|

||||

@@ -59,7 +59,7 @@ cameras:

|

||||

roles:

|

||||

- detect

|

||||

live:

|

||||

stream_name: test_cam_sub

|

||||

stream_name: rtsp_cam_sub

|

||||

```

|

||||

|

||||

### WebRTC extra configuration:

|

||||

@@ -78,6 +78,8 @@ WebRTC works by creating a TCP or UDP connection on port `8555`. However, it req

|

||||

- 192.168.1.10:8555

|

||||

- stun:8555

|

||||

```

|

||||

|

||||

- For access through Tailscale, the Frigate system's Tailscale IP must be added as a WebRTC candidate. Tailscale IPs all start with `100.` and are assigned from the `100.64.0.0/10` CGNAT range.
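
Putting the candidates together, a configuration that also covers Tailscale access could look like the sketch below. The address `100.101.102.103` is a made-up Tailscale IP used only for illustration.

```yaml
go2rtc:
  webrtc:
    candidates:
      - 192.168.1.10:8555
      # Hypothetical Tailscale IP of the Frigate host
      - 100.101.102.103:8555
      - stun:8555
```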

|

||||

|

||||

:::tip

|

||||

|

||||

@@ -97,8 +99,20 @@ However, it is recommended if issues occur to define the candidates manually. Yo

|

||||

If you are having difficulties getting WebRTC to work and you are running Frigate with docker, you may want to try changing the container network mode:

|

||||

|

||||

- `network: host`, in this mode you don't need to forward any ports. The services inside of the Frigate container will have full access to the network interfaces of your host machine as if they were running natively and not in a container. Any port conflicts will need to be resolved. This network mode is recommended by go2rtc, but we recommend you only use it if necessary.

|

||||

- `network: bridge` creates a virtual network interface for the container, and the container will have full access to it. You also don't need to forward any ports, however, the IP for accessing Frigate locally will differ from the IP of the host machine. Your router will see Frigate as if it was a new device connected in the network.

|

||||

- `network: bridge` is the default network driver, a bridge network is a Link Layer device which forwards traffic between network segments. You need to forward any ports that you want to be accessible from the host IP.
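
In a docker-compose file, the network mode is set with the `network_mode` key. A minimal sketch for host mode, with the rest of the service definition omitted:

```yaml
services:
  frigate:
    image: ghcr.io/blakeblackshear/frigate:stable
    # Share the host's network stack; no port mappings are needed in this mode
    network_mode: host
```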

|

||||

|

||||

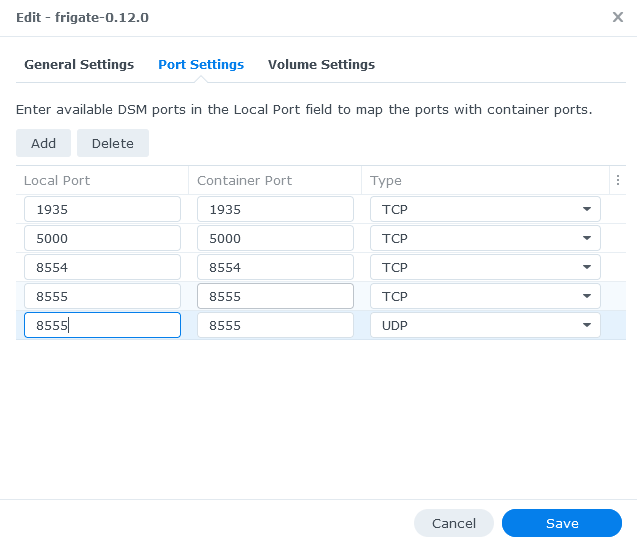

If not running in host mode, port 8555 will need to be mapped for the container:

|

||||

|

||||

docker-compose.yml

|

||||

```yaml
services:
  frigate:
    ...
    ports:
      - "8555:8555/tcp" # WebRTC over tcp
      - "8555:8555/udp" # WebRTC over udp
```

|

||||

|

||||

:::

|

||||

|

||||

See https://github.com/AlexxIT/go2rtc#module-webrtc for more information about this.

|

||||

See [go2rtc WebRTC docs](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#module-webrtc) for more information about this.

|

||||

|

||||

@@ -7,7 +7,13 @@ title: Restream

|

||||

|

||||

Frigate can restream your video feed as an RTSP feed for other applications such as Home Assistant to utilize it at `rtsp://<frigate_host>:8554/<camera_name>`. Port 8554 must be open. [This allows you to use a video feed for detection in Frigate and Home Assistant live view at the same time without having to make two separate connections to the camera](#reduce-connections-to-camera). The video feed is copied from the original video feed directly to avoid re-encoding. This feed does not include any annotation by Frigate.

|

||||

|

||||

Frigate uses [go2rtc](https://github.com/AlexxIT/go2rtc) to provide its restream and MSE/WebRTC capabilities. The go2rtc config is hosted at the `go2rtc` in the config, see [go2rtc docs](https://github.com/AlexxIT/go2rtc#configuration) for more advanced configurations and features.

|

||||

Frigate uses [go2rtc](https://github.com/AlexxIT/go2rtc/tree/v1.2.0) to provide its restream and MSE/WebRTC capabilities. The go2rtc configuration lives under the `go2rtc` key in the Frigate config; see the [go2rtc docs](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#configuration) for more advanced configurations and features.

|

||||

|

||||

:::note

|

||||

|

||||

You can access the go2rtc webUI at `http://frigate_ip:5000/live/webrtc` which can be helpful to debug as well as provide useful information about your camera streams.

|

||||

|

||||

:::

|

||||

|

||||

### Birdseye Restream

|

||||

|

||||

@@ -124,7 +130,7 @@ cameras:

|

||||

|

||||

## Advanced Restream Configurations

|

||||

|

||||

The [exec](https://github.com/AlexxIT/go2rtc#source-exec) source in go2rtc can be used for custom ffmpeg commands. An example is below:

|

||||

The [exec](https://github.com/AlexxIT/go2rtc/tree/v1.2.0#source-exec) source in go2rtc can be used for custom ffmpeg commands. An example is below:

|

||||

|

||||

NOTE: The output will need to be passed with two curly braces `{{output}}`
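
One possible shape for such an entry is sketched here. The stream name `stream1` and the file path are illustrative assumptions; the point is the doubled braces around `output`.

```yaml
go2rtc:
  streams:
    # Loop a local file and publish it over RTSP; note {{output}}, not {output}
    stream1: exec:ffmpeg -hide_banner -re -stream_loop -1 -i /media/example.mp4 -c copy -rtsp_transport tcp -f rtsp {{output}}
```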

|

||||

|

||||

|

||||

@@ -4,3 +4,5 @@ title: Snapshots

|

||||

---

|

||||

|

||||

Frigate can save a snapshot image to `/media/frigate/clips` for each event named as `<camera>-<id>.jpg`.

|

||||

|

||||

Snapshots sent via MQTT are configured in the [config file](https://docs.frigate.video/configuration/) under `cameras -> your_camera -> mqtt`
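
A sketch of that section, assuming a camera named `your_camera` and illustrative option values:

```yaml
cameras:
  your_camera:
    mqtt:
      # Publish snapshot images over MQTT for this camera
      enabled: True
      # Draw the timestamp and bounding box on the published image
      timestamp: True
      bounding_box: True
      # Crop the image to the detected object and resize it
      crop: True
      height: 270
```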

|

||||

|

||||

@@ -11,6 +11,24 @@ During testing, enable the Zones option for the debug feed so you can adjust as

|

||||

|

||||

To create a zone, follow [the steps for a "Motion mask"](masks.md), but use the section of the web UI for creating a zone instead.

|

||||

|

||||

### Restricting events to specific zones

|

||||

|

||||

Often you will only want events to be created when an object enters areas of interest. This is done using zones along with setting required_zones. Let's say you only want to be notified when an object enters your entire_yard zone, the config would be:

|

||||

|

||||

```yaml
camera:
  record:
    events:
      required_zones:
        - entire_yard
  snapshots:
    required_zones:
      - entire_yard
  zones:
    entire_yard:
      coordinates: ...
```

|

||||

|

||||

### Restricting zones to specific objects

|

||||

|

||||

Sometimes you want to limit a zone to specific object types to have more granular control of when events/snapshots are saved. The following example will limit one zone to person objects and the other to cars.
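
A configuration along these lines might look like the following sketch. The camera name and the second zone name `front_yard_street` are assumptions for illustration, and the coordinates are left elided.

```yaml
cameras:
  name_of_your_camera:
    zones:
      entire_yard:
        coordinates: ...
        # Only person objects count as being in this zone
        objects:
          - person
      front_yard_street:
        coordinates: ...
        # Only car objects count as being in this zone
        objects:
          - car
```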

|

||||

|

||||

@@ -36,7 +36,13 @@ Fork [blakeblackshear/frigate-hass-integration](https://github.com/blakeblackshe

|

||||

- [Frigate source code](#frigate-core-web-and-docs)

|

||||

- GNU make

|

||||

- Docker

|

||||

- Extra Coral device (optional, but very helpful to simulate real world performance)

|

||||

- An extra detector (Coral, OpenVINO, etc.) is optional but recommended to simulate real world performance.

|

||||

|

||||

:::note

|

||||

|

||||

A Coral device can only be used by a single process at a time, so an extra Coral device is recommended if using a coral for development purposes.

|

||||

|

||||

:::

|

||||

|

||||

### Setup

|

||||

|

||||

@@ -79,7 +85,7 @@ Create and place these files in a `debug` folder in the root of the repo. This i

|

||||

VSCode will start the docker compose file for you and open a terminal window connected to `frigate-dev`.

|

||||

|

||||

- Run `python3 -m frigate` to start the backend.

|

||||

- In a separate terminal window inside VS Code, change into the `web` directory and run `npm install && npm start` to start the frontend.

|

||||

- In a separate terminal window inside VS Code, change into the `web` directory and run `npm install && npm run dev` to start the frontend.

|

||||

|

||||

#### 5. Teardown

|

||||

|

||||

|

||||

@@ -3,7 +3,7 @@ id: camera_setup

|

||||

title: Camera setup

|

||||

---

|

||||

|

||||

Cameras configured to output H.264 video and AAC audio will offer the most compatibility with all features of Frigate and Home Assistant. H.265 has better compression, but far less compatibility. Safari and Edge are the only browsers able to play H.265. Ideally, cameras should be configured directly for the desired resolutions and frame rates you want to use in Frigate. Reducing frame rates within Frigate will waste CPU resources decoding extra frames that are discarded. There are three different goals that you want to tune your stream configurations around.

|

||||

Cameras configured to output H.264 video and AAC audio will offer the most compatibility with all features of Frigate and Home Assistant. H.265 has better compression, but less compatibility. Chrome 108+, Safari and Edge are the only browsers able to play H.265 and only support a limited number of H.265 profiles. Ideally, cameras should be configured directly for the desired resolutions and frame rates you want to use in Frigate. Reducing frame rates within Frigate will waste CPU resources decoding extra frames that are discarded. There are three different goals that you want to tune your stream configurations around.

|

||||

|

||||

- **Detection**: This is the only stream that Frigate will decode for processing. Also, this is the stream where snapshots will be generated from. The resolution for detection should be tuned for the size of the objects you want to detect. See [Choosing a detect resolution](#choosing-a-detect-resolution) for more details. The recommended frame rate is 5fps, but may need to be higher for very fast moving objects. Higher resolutions and frame rates will drive higher CPU usage on your server.
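
As a small illustration of the detection settings, the fragment below assumes a camera named `front_door` whose detect stream is 1280x720. The resolution should match what the camera actually outputs on that stream.

```yaml
cameras:
  front_door:
    detect:
      # Should match the resolution of the stream assigned the detect role
      width: 1280
      height: 720
      # Recommended starting point; raise only for very fast moving objects
      fps: 5
```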

|

||||

|

||||

|

||||

@@ -23,15 +23,11 @@ I may earn a small commission for my endorsement, recommendation, testimonial, o

|

||||

|

||||

My current favorite is the Minisforum GK41 because of the dual NICs that allow you to setup a dedicated private network for your cameras where they can be blocked from accessing the internet. There are many used workstation options on eBay that work very well. Anything with an Intel CPU and capable of running Debian should work fine. As a bonus, you may want to look for devices with a M.2 or PCIe express slot that is compatible with the Google Coral. I may earn a small commission for my endorsement, recommendation, testimonial, or link to any products or services from this website.

|

||||

|

||||

| Name | Coral Inference Speed | Coral Compatibility | Notes |

|

||||

| ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | --------------- | ------------------- | --------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| Odyssey X86 Blue J4125 (<a href="https://amzn.to/3oH4BKi" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) (<a href="https://www.seeedstudio.com/Frigate-NVR-with-Odyssey-Blue-and-Coral-USB-Accelerator.html?utm_source=Frigate" target="_blank" rel="nofollow noopener sponsored">SeeedStudio</a>) | 9-10ms | M.2 B+M, USB | Dual gigabit NICs for easy isolated camera network. Easily handles several 1080p cameras. |

|

||||

| Minisforum GK41 (<a href="https://amzn.to/3ptnb8D" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 9-10ms | USB | Dual gigabit NICs for easy isolated camera network. Easily handles several 1080p cameras. |

|

||||

| Beelink GK55 (<a href="https://amzn.to/35E79BC" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 9-10ms | USB | Dual gigabit NICs for easy isolated camera network. Easily handles several 1080p cameras. |

|

||||

| Intel NUC (<a href="https://amzn.to/3psFlHi" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 8-10ms | USB | Overkill for most, but great performance. Can handle many cameras at 5fps depending on typical amounts of motion. Requires extra parts. |

|

||||

| BMAX B2 Plus (<a href="https://amzn.to/3a6TBh8" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 10-12ms | USB | Good balance of performance and cost. Also capable of running many other services at the same time as Frigate. |

|

||||

| Atomic Pi (<a href="https://amzn.to/2YjpY9m" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 16ms | USB | Good option for a dedicated low power board with a small number of cameras. Can leverage Intel QuickSync for stream decoding. |

|

||||

| Raspberry Pi 4 (64bit) (<a href="https://amzn.to/2YhSGHH" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 10-15ms | USB | Can handle a small number of cameras. |

|

||||

| Name | Coral Inference Speed | Coral Compatibility | Notes |
| ---- | --------------------- | ------------------- | ----- |
| Odyssey X86 Blue J4125 (<a href="https://amzn.to/3oH4BKi" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) (<a href="https://www.seeedstudio.com/Frigate-NVR-with-Odyssey-Blue-and-Coral-USB-Accelerator.html?utm_source=Frigate" target="_blank" rel="nofollow noopener sponsored">SeeedStudio</a>) | 9-10ms | M.2 B+M, USB | Dual gigabit NICs for easy isolated camera network. Easily handles several 1080p cameras. |
| Minisforum GK41 (<a href="https://amzn.to/3ptnb8D" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 9-10ms | USB | Dual gigabit NICs for easy isolated camera network. Easily handles several 1080p cameras. |
| Intel NUC (<a href="https://amzn.to/3psFlHi" target="_blank" rel="nofollow noopener sponsored">Amazon</a>) | 8-10ms | USB | Overkill for most, but great performance. Can handle many cameras at 5fps depending on typical amounts of motion. Requires extra parts. |

|

||||

|

||||

## Detectors